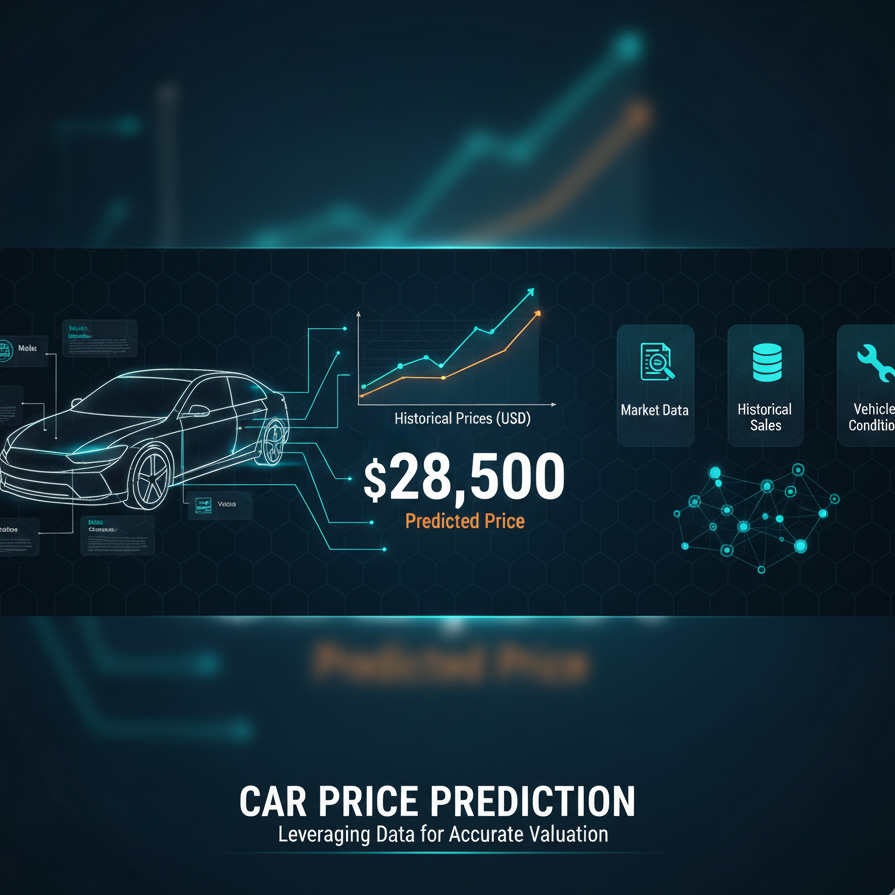

Car Price Prediction

October 12, 2025

Overview

This repository outlines a theoretical blueprint for building a machine learning system that predicts used car prices from historical listings. It focuses on the why and how rather than implementation details—useful for planning, documentation, and stakeholder alignment.

Objectives

- Primary: Predict market-consistent resale prices for used cars.

- Secondary: Identify price drivers (e.g., make, model, mileage, age, condition).

- Business Value: Better pricing guidance, inventory optimization, and transparent valuation.

Problem Definition

- Type: Supervised regression.

- Target Variable: `price` (continuous, currency).

- Inputs (examples):

  - Categorical: `make`, `model`, `trim`, `fuel_type`, `transmission`, `drivetrain`, `body_type`, `seller_type`, `state`/`region`.

  - Numeric: `odometer`, `year`, `engine_displacement`, `horsepower`, `mpg`/efficiency, `owners_count`, `accidents_count`.

  - Temporal: `listing_date`, `first_registration_date`.

  - Textual (optional): `listing_description` (to extract condition flags).

  - Images (optional): vehicle photos (for future multimodal extensions).
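The inputs above can be pinned down as a typed record so that the target is kept apart from the features. This is an illustrative sketch: the class name `Listing`, the field types, and the mapping of `state/region` to `region` and `mpg/efficiency` to `mpg` are assumptions, not a fixed schema.

```python
from dataclasses import dataclass, fields
from datetime import date
from typing import Optional


@dataclass
class Listing:
    """Hypothetical listing record; types are assumptions, names follow the inputs above."""
    # Categorical
    make: str
    model: str
    trim: Optional[str]
    fuel_type: str
    transmission: str
    drivetrain: str
    body_type: str
    seller_type: str
    region: str  # stands in for state/region
    # Numeric
    odometer: float
    year: int
    engine_displacement: Optional[float]
    horsepower: Optional[float]
    mpg: Optional[float]  # stands in for mpg/efficiency
    owners_count: Optional[int]
    accidents_count: Optional[int]
    # Temporal
    listing_date: date
    first_registration_date: Optional[date]
    # Optional text, parsed later into condition flags
    listing_description: Optional[str] = None
    # Target: present on training rows, never used as a feature
    price: Optional[float] = None


TARGET = "price"
FEATURES = [f.name for f in fields(Listing) if f.name != TARGET]
```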

Data Considerations

- Sources: Public listings, dealer feeds, auctions, OEM data, valuation guides.

- Sampling: Ensure coverage across brands, years, and regions; avoid oversampling popular models only.

- Leakage Watchouts: Remove fields not known at listing time (e.g., “sold_price”, “days_to_sell”).

- Privacy & Licensing: Respect data licenses and PII rules; store only necessary attributes.
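The leakage and privacy points can both be enforced mechanically: a denylist check for post-listing fields and an allowlist that keeps only the attributes the model needs. A minimal sketch; `ALLOWED_FIELDS` and the example record are hypothetical.

```python
# Fields known only after the sale (examples from the text above).
POST_LISTING_FIELDS = frozenset({"sold_price", "days_to_sell"})

# Hypothetical allowlist: only attributes needed for modeling survive, which
# also drops PII such as seller contact details.
ALLOWED_FIELDS = frozenset({"make", "model", "year", "odometer", "region",
                            "listing_date", "price"})


def minimize_record(record):
    """Keep only allowlisted fields from a raw listing record."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


def check_no_leakage(columns):
    """Raise if any post-listing field would reach the feature set."""
    leaked = sorted(set(columns) & POST_LISTING_FIELDS)
    if leaked:
        raise ValueError(f"post-listing fields in features: {leaked}")


raw = {"make": "Toyota", "model": "Corolla", "year": 2018, "odometer": 61000,
       "region": "OH", "listing_date": "2025-03-02", "price": 14500,
       "sold_price": 14100, "days_to_sell": 12, "seller_phone": "555-0100"}
clean = minimize_record(raw)
check_no_leakage(clean)  # passes: the allowlist already excludes post-listing fields
```

Pairing the two checks means a new upstream column is dropped by default (allowlist) and a known-leaky one fails loudly if someone adds it to the allowlist by mistake (denylist).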

Data Quality & Preprocessing

- Cleaning: Standardize units (miles vs. km), fix outliers (e.g., 1e7 miles), handle malformed years.

- Missing Values: Impute with domain-aware strategies (e.g., median odometer by age/segment); keep missingness indicators.

- Outliers: Cap or winsorize extreme prices and mileage; consider a robust loss (e.g., Huber) in later modeling stages.

- Deduplication: Remove duplicate VINs/listings where appropriate.

- Temporal Split: Use time-aware splits to mimic future forecasting conditions.
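The cleaning, imputation, outlier, and deduplication steps might be sketched as below in plain Python. Assumptions: a 1,000,000-mile plausibility ceiling, an `odometer_unit` field, grouping by `year` as the default imputation segment (swap in age/segment keys as needed), simple floor-index quantiles for winsorizing, and keeping the first listing per VIN.

```python
from statistics import median

KM_PER_MILE = 1.609344
MAX_PLAUSIBLE_MILES = 1_000_000  # assumption: higher readings are data-entry errors


def standardize_odometer(rec):
    """Convert km to miles and blank out implausible readings such as 1e7 miles."""
    rec = dict(rec)
    odo = rec.get("odometer")
    if odo is not None and rec.get("odometer_unit") == "km":
        odo = odo / KM_PER_MILE
    rec["odometer"] = odo if odo is not None and 0 <= odo <= MAX_PLAUSIBLE_MILES else None
    rec["odometer_unit"] = "mi"
    return rec


def impute_odometer(records, key=("year",)):
    """Fill missing odometer with its group median (global median as fallback)
    and keep a missingness indicator. Assumes at least one observed value."""
    groups = {}
    for r in records:
        if r["odometer"] is not None:
            groups.setdefault(tuple(r[k] for k in key), []).append(r["odometer"])
    fallback = median(v for vals in groups.values() for v in vals)
    out = []
    for r in records:
        r = dict(r, odometer_missing=r["odometer"] is None)
        if r["odometer_missing"]:
            vals = groups.get(tuple(r[k] for k in key))
            r["odometer"] = median(vals) if vals else fallback
        out.append(r)
    return out


def winsorize(values, lower_q=0.01, upper_q=0.99):
    """Clip values to simple floor-index quantiles of the sample."""
    s = sorted(values)
    lo, hi = s[int(lower_q * (len(s) - 1))], s[int(upper_q * (len(s) - 1))]
    return [min(max(v, lo), hi) for v in values]


def dedupe_by_vin(records):
    """Keep the first listing per VIN; rows without a VIN are kept."""
    seen, out = set(), []
    for r in records:
        vin = r.get("vin")
        if vin is None or vin not in seen:
            out.append(r)
        if vin is not None:
            seen.add(vin)
    return out
```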

Feature Engineering

- Domain Features:

  - `age = listing_year - model_year`

  - `usage_intensity = odometer / age` (mi/yr or km/yr; guard against `age = 0` for current-model-year listings)

  - Price-depreciation interactions (e.g., `make × age`, `segment × mileage`)

- Categoricals: Target encoding or one-hot (depending on cardinality). Guard against leakage by computing target encodings out-of-fold, i.e., fitting them only on the training folds of each (nested) CV split.

- Geography: Region-level demand indices, fuel price levels, climate proxies (salt/road wear).

- Condition Signals: Keywords extracted from listing text (e.g., “accident-free”, “new tires”) turned into binary flags.

- Seasonality: Month or quarter; promo periods.

- Market Dynamics: Rolling-average market price for the `(make, model, trim, age)` cell, computed only from earlier listings and excluding the current one (leakage-safe).
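The domain features, the leakage-safe market price, and out-of-fold target encoding can be sketched in plain Python as below. This is illustrative only: the one-year floor in the `usage_intensity` denominator, the 20-listing window, the modulo fold assignment, and the smoothing constant are assumptions, and in a time-aware setup the target-encoding folds should also respect time order.

```python
from collections import defaultdict, deque
from itertools import groupby


def add_domain_features(rec):
    """age and usage_intensity as listed above; age is floored at 1 year in the
    denominator (assumption) so current-model-year cars do not divide by zero."""
    age = rec["listing_date"].year - rec["year"]
    return {**rec, "age": age, "usage_intensity": rec["odometer"] / max(age, 1)}


def cell(rec):
    return (rec["make"], rec["model"], rec["trim"], rec["age"])


def add_market_price(records, window=20):
    """Mean price of up to `window` earlier listings in the same cell.
    Listings on the same date never see each other's prices. Output is date-sorted."""
    history = defaultdict(lambda: deque(maxlen=window))
    out = []
    ordered = sorted(records, key=lambda r: r["listing_date"])
    for _, day in groupby(ordered, key=lambda r: r["listing_date"]):
        day = list(day)
        for r in day:  # read history first ...
            h = history[cell(r)]
            out.append({**r, "market_price": sum(h) / len(h) if h else None})
        for r in day:  # ... then add this date's prices
            history[cell(r)].append(r["price"])
    return out


def oof_target_encode(records, col, n_folds=5, smoothing=10.0):
    """Smoothed mean-price encoding of `col`, computed out-of-fold: each row is
    encoded using only rows from the other folds, so its own price never leaks."""
    enc = [None] * len(records)
    for k in range(n_folds):
        train = [r for i, r in enumerate(records) if i % n_folds != k]
        prior = sum(r["price"] for r in train) / len(train)
        stats = defaultdict(lambda: [0.0, 0])
        for r in train:
            stats[r[col]][0] += r["price"]
            stats[r[col]][1] += 1
        for i, r in enumerate(records):
            if i % n_folds == k:
                total, n = stats.get(r[col], (0.0, 0))
                enc[i] = (total + smoothing * prior) / (n + smoothing)
    return enc
```

Processing listings day by day is what makes the market price leakage-safe: a listing's own price, and those of same-day listings, only enter the history after its feature has been computed.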

Modeling Approach

- Baseline: Median price by `(make, model, year)`, plus a simple linear regression on `age` + `odometer`.

- Interpretable Models: Linear/Elastic Net with interactions; Generalized Additive Models (GAM) for smooth effects.

- Nonlinear Models: Gradient Boosted Trees (XGBoost/LightGBM/CatBoost) to capture complex interactions; all three libraries support monotonic constraints (e.g., predicted price should not rise as mileage increases).

- Model Selection Philosophy:

  - Start with baselines for sanity.

  - Use tree ensembles as strong tabular learners.

  - Consider a hybrid: an interpretable core plus a residual booster.

- Regularization/Constraints: Monotonicity (age ↑ → price ↓; mileage ↑ → price ↓), fairness-aware constraints if applicable.
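The two baselines can be sketched as follows. The back-off from `(make, model, year)` to `(make, model)` and then to a global median for unseen cells is an assumption the text does not specify; the regression solves the two-feature least-squares normal equations directly rather than relying on a library.

```python
from collections import defaultdict
from statistics import median


class MedianBaseline:
    """Median price per (make, model, year), backing off to (make, model), then global."""
    LEVELS = (("make", "model", "year"), ("make", "model"))

    def fit(self, records):
        self.tables = []
        for keys in self.LEVELS:
            groups = defaultdict(list)
            for r in records:
                groups[tuple(r[k] for k in keys)].append(r["price"])
            self.tables.append({g: median(p) for g, p in groups.items()})
        self.global_median = median([r["price"] for r in records])
        return self

    def predict(self, rec):
        for keys, table in zip(self.LEVELS, self.tables):
            key = tuple(rec[k] for k in keys)
            if key in table:
                return table[key]
        return self.global_median


def fit_age_odometer_ols(records):
    """OLS of price on age and odometer via the centered 2x2 normal equations.
    Returns (intercept, coef_age, coef_odometer)."""
    n = len(records)
    ma = sum(r["age"] for r in records) / n
    mo = sum(r["odometer"] for r in records) / n
    mp = sum(r["price"] for r in records) / n
    saa = sum((r["age"] - ma) ** 2 for r in records)
    soo = sum((r["odometer"] - mo) ** 2 for r in records)
    sao = sum((r["age"] - ma) * (r["odometer"] - mo) for r in records)
    sap = sum((r["age"] - ma) * (r["price"] - mp) for r in records)
    sop = sum((r["odometer"] - mo) * (r["price"] - mp) for r in records)
    det = saa * soo - sao ** 2  # zero if age and odometer are perfectly collinear
    b_age = (sap * soo - sop * sao) / det
    b_odo = (sop * saa - sap * sao) / det
    return mp - b_age * ma - b_odo * mo, b_age, b_odo
```

Any candidate model that cannot beat both of these on the same validation folds is a signal to revisit features or leakage controls before adding complexity.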

Validation Strategy

- Time-Aware CV: Rolling-origin or blocked time splits to simulate forecasting on unseen months.

- Group-Aware CV: Group by `VIN` or `(make, model)` to avoid leakage across splits when duplicates/near-duplicates exist.

- Robustness Checks: Evaluate across segments (luxury vs. economy), regions, and ages to ensure consistent performance.
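A rolling-origin split with a VIN-level guard can be sketched as below. The `listing_month` field (a `YYYY-MM` string), the expanding training window, and the choice to drop relisted VINs from the test fold rather than from training are assumptions.

```python
def rolling_origin_splits(records, n_test_months=1, min_train_months=6):
    """Yield (train_idx, test_idx): train on all months before the cutoff,
    test on the next `n_test_months`. Test rows whose VIN already appears in
    training are dropped so a relisted car cannot leak across the split."""
    months = sorted({r["listing_month"] for r in records})  # "YYYY-MM" sorts chronologically
    for cut in range(min_train_months, len(months) - n_test_months + 1):
        train_m = set(months[:cut])
        test_m = set(months[cut:cut + n_test_months])
        train = [i for i, r in enumerate(records) if r["listing_month"] in train_m]
        seen_vins = {records[i]["vin"] for i in train}
        test = [i for i, r in enumerate(records)
                if r["listing_month"] in test_m and r["vin"] not in seen_vins]
        yield train, test
```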

Metrics

- Primary: MAE (currency-scale, interpretable average error).

- Secondary: RMSE (penalizes large misses), MAPE (use with caution; unstable near zero), Median AE (robust to outliers).

- Business KPIs: % within ±X% of actual; pricing hit rate; margin impact.
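The metrics and the ±X% KPI can be computed together. A minimal sketch; `within_pct` plays the role of X, and excluding targets below `mape_floor` from MAPE is one assumed way to handle its instability near zero.

```python
from math import sqrt
from statistics import median


def regression_report(y_true, y_pred, within_pct=10.0, mape_floor=1.0):
    """MAE, RMSE, MAPE, Median AE, and the share of predictions within ±within_pct %."""
    errors = [p - t for t, p in zip(y_true, y_pred)]
    abs_err = [abs(e) for e in errors]
    n = len(errors)
    # MAPE ignores targets with |t| < mape_floor (assumption) to avoid exploding ratios
    pct = [a / abs(t) * 100 for a, t in zip(abs_err, y_true) if abs(t) >= mape_floor]
    hits = sum(a <= within_pct / 100 * abs(t) for a, t in zip(abs_err, y_true))
    return {
        "MAE": sum(abs_err) / n,
        "RMSE": sqrt(sum(e * e for e in errors) / n),
        "MAPE": sum(pct) / len(pct) if pct else float("nan"),
        "MedianAE": median(abs_err),
        "within_pct_share": 100 * hits / n,
    }
```

Reporting RMSE next to MAE and Median AE makes it visible when a few large misses, rather than broad inaccuracy, drive the error.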

Error Analysis

- Stratified Residuals: Slice errors by `make`, `age`, `mileage`, `region`, and season.

- Undervaluation/Overvaluation Patterns: Identify cohorts with systemic bias (e.g., hybrids in cold regions).

- Data Gaps: Models often struggle on rare trims/low-sample vintages; consider hierarchical priors or shrinkage.
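Residual slicing and a basic form of shrinkage can be sketched as below. `slice_errors` reports the mean signed error per slice (positive means the model overvalues), and `shrunk_mean` pulls a sparse cell's mean toward a parent-level prior. The pseudo-count `k` is a hypothetical tuning constant, and this is one simple shrinkage estimator, not a full hierarchical model.

```python
from collections import defaultdict


def slice_errors(records, preds, key):
    """Per-slice count, MAE, and mean signed error (bias); positive bias = overvaluation."""
    acc = defaultdict(lambda: [0, 0.0, 0.0])
    for r, p in zip(records, preds):
        e = p - r["price"]
        a = acc[r[key]]
        a[0] += 1
        a[1] += abs(e)
        a[2] += e
    return {k: {"n": n, "MAE": s_abs / n, "bias": s / n} for k, (n, s_abs, s) in acc.items()}


def shrunk_mean(values, prior_mean, k=20.0):
    """Blend a cell's mean with a parent-level prior; k acts as a pseudo-count,
    so cells with few listings stay close to the prior."""
    return (sum(values) + k * prior_mean) / (len(values) + k)
```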

Bias, Fairness, and Risk

- Sensitive Attributes: Do not use protected attributes as features, and audit indirect proxies (e.g., ZIP codes).

- Fairness Checks: Compare errors by geography, seller type, and vehicle segment to avoid systematic disadvantage.

- Market Shifts: Monitor drift (e.g., fuel price spikes, policy changes affecting EV resale).

- Ethics: Provide prediction intervals and explanations; avoid overstating certainty.
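For an individual listing, the useful interval is one that covers the price itself (a prediction interval). One model-agnostic way to get it, swapped in here as an illustration rather than taken from the text, is split conformal prediction: compute absolute residuals on a held-out calibration set and use a finite-sample-corrected quantile as the half-width. Coverage is marginal and assumes calibration and future listings are exchangeable, which market drift can break.

```python
import math


def conformal_half_width(cal_true, cal_pred, alpha=0.1):
    """Split-conformal half-width q: [pred - q, pred + q] covers the true price
    with probability >= 1 - alpha on average, if calibration and future listings
    are exchangeable."""
    scores = sorted(abs(t - p) for t, p in zip(cal_true, cal_pred))
    n = len(scores)
    rank = math.ceil((n + 1) * (1 - alpha))  # finite-sample corrected rank
    if rank > n:
        return math.inf  # too few calibration points for this alpha
    return scores[rank - 1]


def predict_interval(pred, q):
    return pred - q, pred + q
```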

Model Explainability

- Global: Feature importance, partial dependence, SHAP global summaries.

- Local: Per-listing SHAP attributions, monotonic constraints to align with domain logic.

- Documentation: Record assumptions, known limitations, and intended use.
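SHAP attributions need a dedicated library, but a partial dependence curve can be computed for any model that maps a row to a price, and it doubles as a check that the fitted model respects the intended monotonic direction. In this sketch `predict` is any callable taking a row dict; the linear `toy_model` in the usage lines is purely hypothetical.

```python
def partial_dependence(predict, rows, feature, grid):
    """Average prediction with `feature` forced to each grid value for every row."""
    curve = []
    for v in grid:
        preds = [predict({**r, feature: v}) for r in rows]
        curve.append(sum(preds) / len(preds))
    return curve


def is_non_increasing(curve, tol=0.0):
    """True if the curve never rises by more than `tol` between grid points."""
    return all(b <= a + tol for a, b in zip(curve, curve[1:]))


# Usage with a hypothetical toy model
def toy_model(r):
    return 30000 - 1500 * r["age"] - 0.05 * r["odometer"]


rows = [{"age": 2, "odometer": 20000}, {"age": 6, "odometer": 80000}]
curve = partial_dependence(toy_model, rows, "odometer", [0, 50000, 100000])
```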

Production & MLOps (Conceptual)

- Versioning: Dataset snapshots, feature definitions, model artifacts with semantic versions.

- Monitoring: Track MAE drift, data schema drift, feature range violations, and segment-level performance.

- Retraining Cadence: Time-based (e.g., monthly) and event-driven (detected drift).

- A/B Testing: Compare current model vs. challenger on live traffic with guardrails.
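Feature-drift and range checks can be sketched with the Population Stability Index (PSI), a common drift statistic substituted here because the text does not name one. Bin edges would come from the training snapshot; the `eps` floor for empty bins is an assumption, and thresholds such as 0.1 or 0.25 are industry rules of thumb rather than standards.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a reference and a live sample,
    using fixed, sorted bin edges (len(edges) + 1 bins)."""
    def shares(xs):
        counts = [0] * (len(edges) + 1)
        for x in xs:
            counts[sum(x >= e for e in edges)] += 1  # index of the bin containing x
        return [max(c / len(xs), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def range_violations(values, lo, hi):
    """Count live values outside the [lo, hi] range seen in training."""
    return sum(not (lo <= v <= hi) for v in values)
```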

Reproducibility

- Determinism: Fixed random seeds, pinned dependency versions.

- Pipelines: Modular steps for ingest → clean → feature → train → evaluate.

- Metadata: Log configs, metrics, and data hashes per run for auditability.
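The determinism and metadata points might look like this in a run script. A minimal sketch; the record layout and field names are hypothetical, and a real pipeline would also capture pinned dependency versions (e.g., a hash of the lock file).

```python
import hashlib
import json
import platform
import random


def set_seed(seed):
    """Seed Python's RNG; seed numpy or any GBM library here too if used."""
    random.seed(seed)


def sha256_file(path):
    """Stream a data snapshot through SHA-256 so its exact version can be logged."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def run_metadata(config, metrics, data_paths=()):
    """Record of one run: config plus a key-order-independent hash, data hashes, metrics."""
    canonical = json.dumps(config, sort_keys=True).encode()
    return {
        "config": config,
        "config_sha256": hashlib.sha256(canonical).hexdigest(),
        "data_sha256": {p: sha256_file(p) for p in data_paths},
        "metrics": metrics,
        "python_version": platform.python_version(),
    }
```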

Project Structure (Proposed)

- `docs/`: methodology notes, data dictionary, error-analysis reports

- `data_spec/`: schemas, validation rules, feature contracts

- `experiments/`: experiment definitions, results summaries

- `models/`: model cards (rationale, metrics, caveats)

- `monitoring/`: theoretical monitoring plans, alert thresholds

- `roadmap.md`: planned improvements and study questions

(No code is included in this repository because it is theory-only.)

Roadmap

- Establish baselines and a monotonic Elastic Net.

- Add gradient boosting with leakage-safe encodings.

- Build robust time-aware validation and error slicing.

- Introduce explainability reports per segment.

- Deploy the conceptual monitoring and drift-detection plan.

- Explore multimodal signals (images/text) if data permits.

Limitations

- Results depend on data coverage and quality; rare trims/years remain challenging.

- Economic shocks can invalidate learned patterns, which calls for frequent retraining and monitoring.

- Text/image features add value but increase complexity and governance needs.

References (Conceptual)

- Tabular ML best practices (regularization, leakage control, CV design)

- Interpretable ML resources (monotonic models, GAMs)

- Model risk management guidelines for applied ML (documentation, monitoring)

License

This theoretical documentation is provided for educational and planning purposes. Adapt as needed for your organization’s policies and data governance.